We have a requirement to run all the JSON payloads that are stored in separate files. This post shows a Python script that reads all the JSON files from a folder in the Airflow project.

What are DAGs? Directed acyclic graphs (DAGs) are used in Airflow to create workflows.

To read a JSON file in Python, open it in a `with` block, for example `with open('fcc.json', 'r') as fcc_file:`, and parse the file object with `json.load`. If I misspell `fcc.json`, the call fails with a `FileNotFoundError`.

If the file cannot be opened for another reason, we will receive an `OSError` (`FileNotFoundError` is itself a subclass of `OSError`).

This post will also explore Parquet in cloud computing services: an optimized S3 folder structure, an adequate size of partitions, and when, why and how to use it.
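A minimal sketch of reading one JSON file with both failure modes handled. The helper name `read_json_file` is hypothetical; `fcc.json` is the file name used above. `FileNotFoundError` is caught before `OSError` because it is a subclass of it.

```python
import json
from pathlib import Path
from typing import Any, Union


def read_json_file(path: Union[str, Path]) -> Any:
    """Open a JSON file and return the parsed Python object.

    A missing (e.g. misspelled) path raises FileNotFoundError; any other
    OSError (permissions, path is a directory, ...) is re-raised unchanged.
    """
    try:
        with open(path, "r", encoding="utf-8") as fcc_file:
            return json.load(fcc_file)
    except FileNotFoundError:
        # Must come first: FileNotFoundError is a subclass of OSError.
        print(f"File not found: {path!r} (check the spelling)")
        raise
    except OSError as err:
        print(f"Could not open {path!r}: {err}")
        raise
```

Calling `read_json_file("fcc.json")` returns a `dict` or `list` depending on the top-level JSON value, while `read_json_file("fcc.jsn")` prints a hint and re-raises `FileNotFoundError`.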

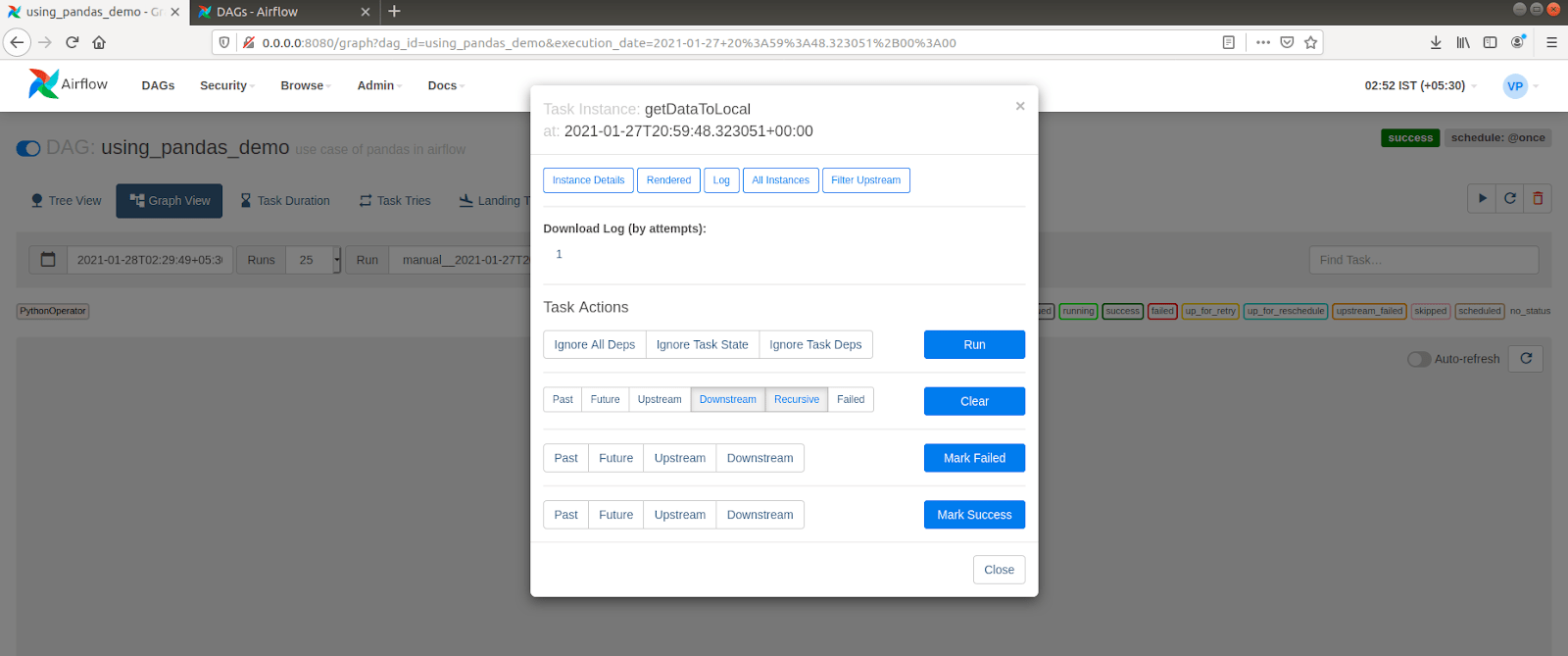

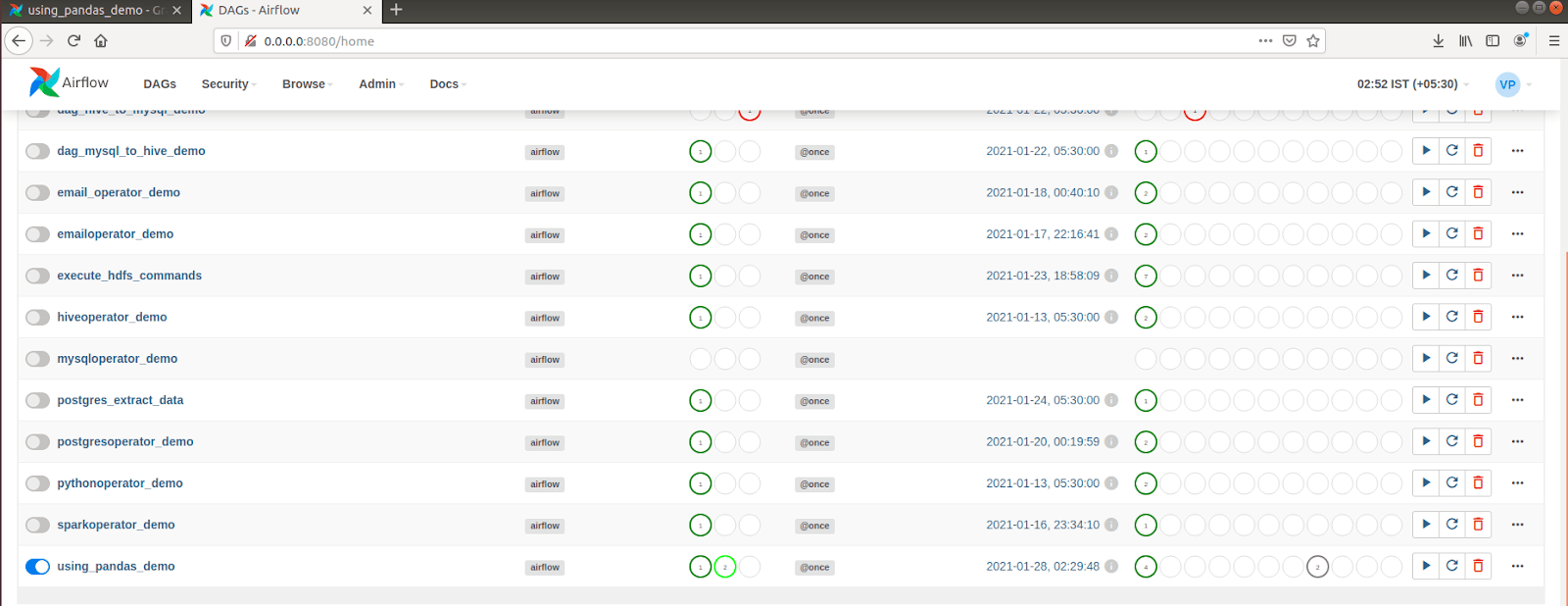

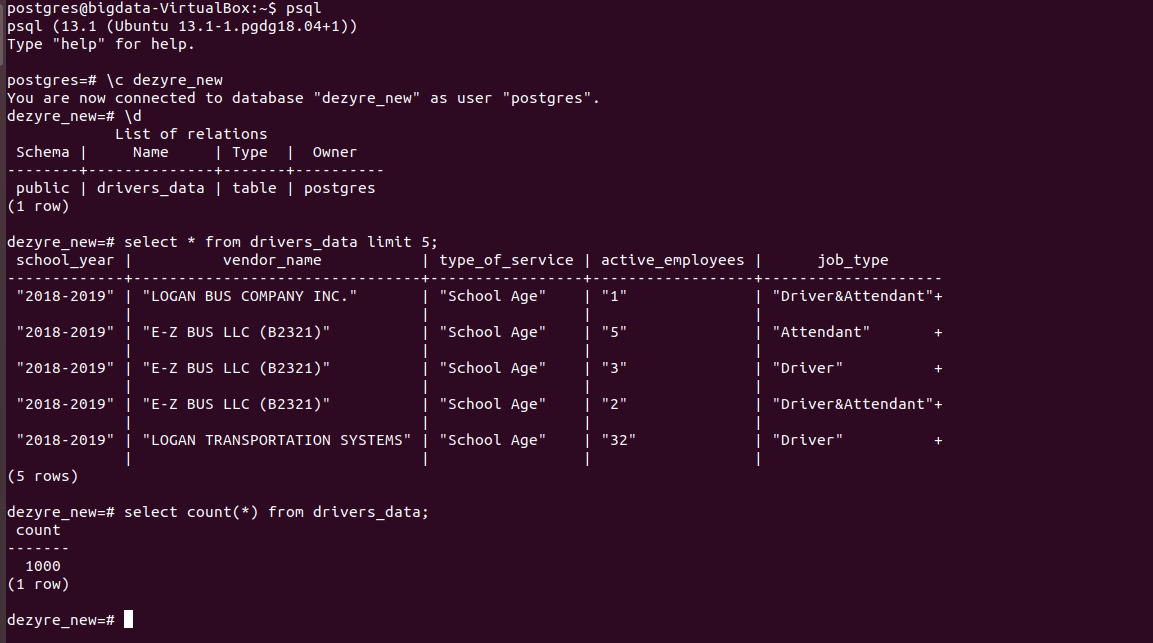

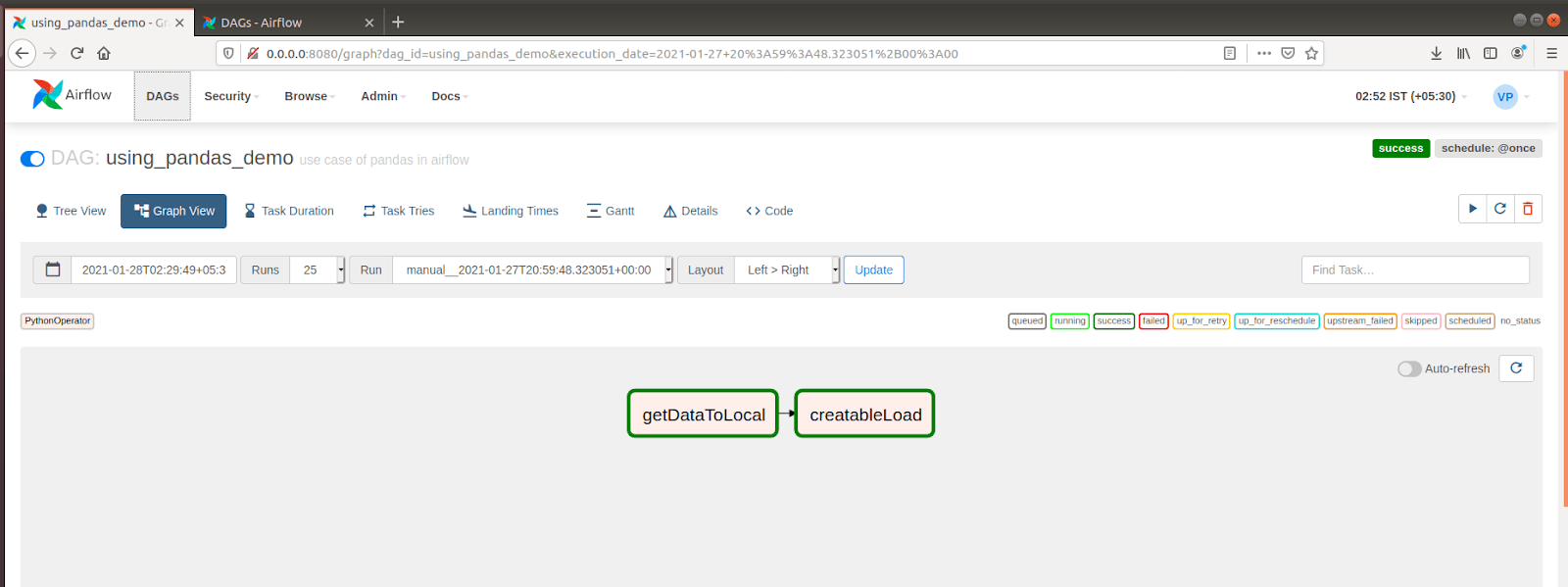

Airflow's `LocalFilesystemBackend`, declared as `class LocalFilesystemBackend(BaseSecretsBackend, LoggingMixin)`, retrieves Connection objects and Variables from local files; `json`, `yaml` and `.env` files are supported.

Payload files are opened the same way as any other JSON file. For example, `with open('orders.json') as file:` opens the `orders.json` file; the file name must be a quoted string, because `open(orders.json)` without quotes raises a `NameError` before any file is touched. Similarly, `with open('myfile.json', 'r') as f:` opens `myfile.json` for reading. This happens because the `open` and `read` functions are not used to get the file.

For a storage bucket, the region setting is templated, and object data for objects in the bucket resides in physical storage within this region.

Create a variable with a ... Here, we also write an expression for extracting the header row from the array (the ...
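A sketch of pointing Airflow at the local filesystem backend, assuming it is configured through environment variables (the `AIRFLOW__SECRETS__BACKEND` and `AIRFLOW__SECRETS__BACKEND_KWARGS` variables mirror the `[secrets]` `backend` and `backend_kwargs` options in `airflow.cfg`). The helper `configure_local_secrets`, the file names, and the variable/connection values are hypothetical placeholders.

```python
import json
from pathlib import Path


def configure_local_secrets(secrets_dir: Path) -> dict[str, str]:
    """Write example variable/connection files and return the environment
    variables that make LocalFilesystemBackend read them.

    All keys and values written here are illustrative placeholders.
    """
    secrets_dir.mkdir(parents=True, exist_ok=True)
    variables_file = secrets_dir / "variables.json"
    connections_file = secrets_dir / "connections.json"

    # Variables file: a flat JSON object mapping variable key -> value.
    variables_file.write_text(json.dumps({"payload_folder": "/opt/airflow/data/payloads"}))
    # Connections file: connection id -> connection URI.
    connections_file.write_text(
        json.dumps({"my_postgres": "postgresql://user:pass@localhost:5432/mydb"})
    )

    return {
        "AIRFLOW__SECRETS__BACKEND": "airflow.secrets.local_filesystem.LocalFilesystemBackend",
        "AIRFLOW__SECRETS__BACKEND_KWARGS": json.dumps(
            {
                "variables_file_path": str(variables_file),
                "connections_file_path": str(connections_file),
            }
        ),
    }
```

The returned mapping would be exported in the scheduler's and workers' environment (for example via `os.environ.update(...)` in a launcher, or in a container definition) before Airflow starts.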

# Load the JSON Data

Take configuration files, for example. Let's begin our pipeline by creating the `covid_data.py` file in our `airflow/dags` directory. The DAG is defined inside a context manager: `with DAG(dag_id=dag_name, default_args=dag_args) as dag:`.
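A sketch of what `covid_data.py` could look like when a task reads its settings from a JSON configuration file. It assumes Airflow 2.x import paths; the config path, the `read_config` callable, and the values given to `dag_name` and `dag_args` are hypothetical. The `try`/`except ImportError` guard exists only so the callable can be exercised where Airflow is not installed.

```python
"""Sketch of airflow/dags/covid_data.py: a DAG whose task reads a JSON config file."""
import json
from datetime import datetime
from typing import Any

try:
    # Airflow 2.x import paths.
    from airflow import DAG
    from airflow.operators.python import PythonOperator
except ImportError:
    # Lets read_config be tested where Airflow is not installed.
    DAG = None


def read_config(config_path: str) -> dict[str, Any]:
    """Read the pipeline settings from a JSON configuration file."""
    with open(config_path, "r", encoding="utf-8") as config_file:
        return json.load(config_file)


# Placeholder values for the names used in the DAG definition.
dag_name = "covid_data"
dag_args = {"owner": "airflow", "start_date": datetime(2021, 1, 1)}

if DAG is not None:
    with DAG(dag_id=dag_name, default_args=dag_args, catchup=False) as dag:
        read_config_task = PythonOperator(
            task_id="read_config",
            python_callable=read_config,
            # Hypothetical location of the config file in the Airflow project.
            op_kwargs={"config_path": "/opt/airflow/dags/covid_config.json"},
        )
```

Reading the file inside the task, rather than at module level, keeps file I/O out of DAG parsing, which the scheduler repeats frequently.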

From what I've gathered, the only way to solve this issue in Airflow 1.x, where the JSON value reaches the operator as a string, is to deserialise the string with `json.loads` somewhere in the operator code.
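A minimal sketch of that workaround inside a `PythonOperator` callable. Both function names are hypothetical. On Airflow 2.1 and later, passing `render_template_as_native_obj=True` to the DAG renders templates to native Python objects instead, which makes this manual step unnecessary.

```python
import json
from typing import Any


def ensure_json_object(value: Any) -> Any:
    """Return value as a Python object, deserialising it if it is a JSON string.

    In Airflow 1.x a templated field such as "{{ var.value.payload }}" is
    rendered to a str, so json.loads is needed before using it as a dict/list.
    """
    if isinstance(value, (str, bytes, bytearray)):
        return json.loads(value)
    return value


def process_payload(payload: Any, **context: Any) -> int:
    """Hypothetical PythonOperator callable that counts the records in a payload."""
    records = ensure_json_object(payload)
    if isinstance(records, dict):
        # A single JSON object counts as one record.
        records = [records]
    return len(records)
```

The check on the input type means the same callable keeps working if the value is later passed as a native object.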

Load JSON data into a Postgres table using Airflow: I have an Airflow DAG that ...

To extract the header row from the array, add another Compose action and rename it 'csv header'.
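A sketch of the load step, assuming each payload is a JSON array of flat objects that share the same keys. It uses Python's built-in `sqlite3` as a stand-in for Postgres so it runs anywhere; with a Postgres driver such as psycopg2 the placeholder would be `%s` instead of `?`, and inside Airflow the Postgres provider's `PostgresHook` offers `insert_rows(table, rows, target_fields)` from the common DB-API hook. The function names and the `orders` table are hypothetical.

```python
import json
import sqlite3


def json_to_rows(payload: str) -> tuple[list[str], list[tuple]]:
    """Turn a JSON array of flat objects into (column names, row tuples).

    Column order is taken from the first record; missing keys become None.
    """
    records = json.loads(payload)
    if not records:
        return [], []
    columns = list(records[0].keys())
    rows = [tuple(record.get(col) for col in columns) for record in records]
    return columns, rows


def load_rows(conn: sqlite3.Connection, table: str, columns: list[str], rows: list[tuple]) -> int:
    """Insert rows with a parameterised query.

    Values are bound as parameters; the table and column names are formatted
    into the SQL, so they must come from trusted input.
    """
    placeholders = ", ".join("?" for _ in columns)  # psycopg2 would use %s
    sql = f"INSERT INTO {table} ({', '.join(columns)}) VALUES ({placeholders})"
    with conn:  # commits on success, rolls back on error
        conn.executemany(sql, rows)
    return len(rows)
```

Splitting parsing from inserting lets the same `json_to_rows` output feed either a raw driver cursor or a hook's bulk-insert method.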

# Python Script to Read All JSON Files from a Folder in the Airflow Project

There are a few best practices to follow with Airflow Variables. Note that it is the YAML/JSON file contents that are loaded, rather than the file path. I also want to be able to read each file in Python, so I wrote a small program to do it.
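A minimal sketch of a script that reads all JSON files from a folder in the Airflow project. The folder location is an assumption (for example a `data/` directory next to `dags/`, or a path stored in an Airflow Variable), and the function name `read_all_json_files` is hypothetical.

```python
import json
from pathlib import Path
from typing import Any, Union


def read_all_json_files(folder: Union[str, Path]) -> dict[str, Any]:
    """Parse every *.json file in folder (non-recursive), keyed by file name.

    Files are processed in sorted order so runs are reproducible; a file that
    is not valid JSON is reported and skipped instead of stopping the run.
    """
    payloads: dict[str, Any] = {}
    for path in sorted(Path(folder).glob("*.json")):
        try:
            with path.open("r", encoding="utf-8") as json_file:
                payloads[path.name] = json.load(json_file)
        except json.JSONDecodeError as err:
            print(f"Skipping {path.name}: invalid JSON ({err})")
    return payloads
```

Each payload can then be processed in turn, for example `for name, payload in read_all_json_files("/opt/airflow/data").items(): ...`. Use `rglob("*.json")` instead of `glob` if subfolders should be included.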


In this scenario, we schedule a DAG file that reads the JSON from the API by request URL and gets the JSON data using pandas. The lone step in the extract stage is simply to request the latest dataset. The same pattern can be used with the `PythonOperator` by providing a callable such as `def az_upload(json_file_path):`.
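A sketch of the extract step and an `az_upload`-style callable. It uses the standard library (`urllib.request`, `json`) rather than pandas; if pandas is available, `pandas.json_normalize` can turn the loaded object into a DataFrame. The real Azure call is not shown: `upload` is a hypothetical stand-in that could wrap, for example, the Azure provider's `WasbHook`. Function names other than `az_upload` are hypothetical.

```python
import json
import urllib.request
from pathlib import Path
from typing import Any, Callable, Union


def fetch_latest_dataset(url: str, dest_path: Union[str, Path], timeout: float = 30.0) -> Path:
    """Extract stage: request the latest dataset and save the JSON response."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        data: Any = json.load(response)  # json.load accepts a binary file object
    dest = Path(dest_path)
    dest.write_text(json.dumps(data), encoding="utf-8")
    return dest


def az_upload(json_file_path: Union[str, Path], upload: Callable[[str, bytes], None]) -> str:
    """PythonOperator-style callable: validate the JSON file, then hand it to upload.

    upload(blob_name, data) is a hypothetical stand-in for the real Azure call.
    """
    path = Path(json_file_path)
    raw = path.read_bytes()
    json.loads(raw)  # fail early if the file is not valid JSON
    upload(path.name, raw)
    return path.name
```

Injecting `upload` keeps the callable testable without Azure credentials; in a DAG it would be supplied through `op_kwargs` or a small wrapper function.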