Delta Lake stores data in the Parquet file format, which is a columnar storage format. A separate article provides details on the Delta Live Tables Python programming interface. In Databricks SQL and Databricks Runtime, `SHOW CREATE TABLE` returns the `CREATE TABLE` or `CREATE VIEW` statement that was used to create a given table or view. Each operation that modifies a Delta Lake table creates a new table version.
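
As a rough illustration of per-operation versioning, the sketch below models a transaction log in memory. Each commit is a list of `add`/`remove` file actions, its position in the log is the table version, and replaying commits 0 through N yields the data files that make up version N (the idea behind reading an older version). `ToyDeltaLog` and the file names are hypothetical. The real log is a series of JSON commit files in the table's `_delta_log/` directory, managed by the Delta Lake libraries, and it also carries metadata, protocol, and commit-info actions plus periodic checkpoints that this toy ignores.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ToyDeltaLog:
    """Hypothetical in-memory stand-in for a Delta transaction log."""

    # commits[v] holds the file actions recorded for table version v.
    commits: list[list[tuple[str, str]]] = field(default_factory=list)

    def commit(self, actions: list[tuple[str, str]]) -> int:
        """Record one atomic commit of ("add" | "remove", path) actions; return its version."""
        for kind, _path in actions:
            if kind not in ("add", "remove"):
                raise ValueError(f"unknown action: {kind!r}")
        self.commits.append(list(actions))
        return len(self.commits) - 1

    def snapshot(self, version: int | None = None) -> set[str]:
        """Replay commits 0..version and return the data files live at that version."""
        latest = len(self.commits) - 1
        if version is None:
            version = latest
        if not 0 <= version <= latest:
            raise ValueError(f"version {version} does not exist")
        live: set[str] = set()
        for actions in self.commits[: version + 1]:
            for kind, path in actions:
                if kind == "add":
                    live.add(path)
                else:
                    live.discard(path)
        return live


if __name__ == "__main__":
    log = ToyDeltaLog()
    log.commit([("add", "part-0000.parquet")])      # version 0: initial write
    log.commit([("add", "part-0001.parquet")])      # version 1: append
    log.commit([("remove", "part-0000.parquet"),
                ("add", "part-0002.parquet")])      # version 2: one file rewritten
    print(sorted(log.snapshot(1)))  # ['part-0000.parquet', 'part-0001.parquet']
    print(sorted(log.snapshot()))   # ['part-0001.parquet', 'part-0002.parquet']
```

Because old versions are never mutated, only superseded by later commits, an earlier snapshot can still be reconstructed as long as its data files have not been physically deleted.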

By default, all tables created in Azure Databricks are Delta tables. Delta tables are typically used for … Delta tables have many features; some of them are listed below:

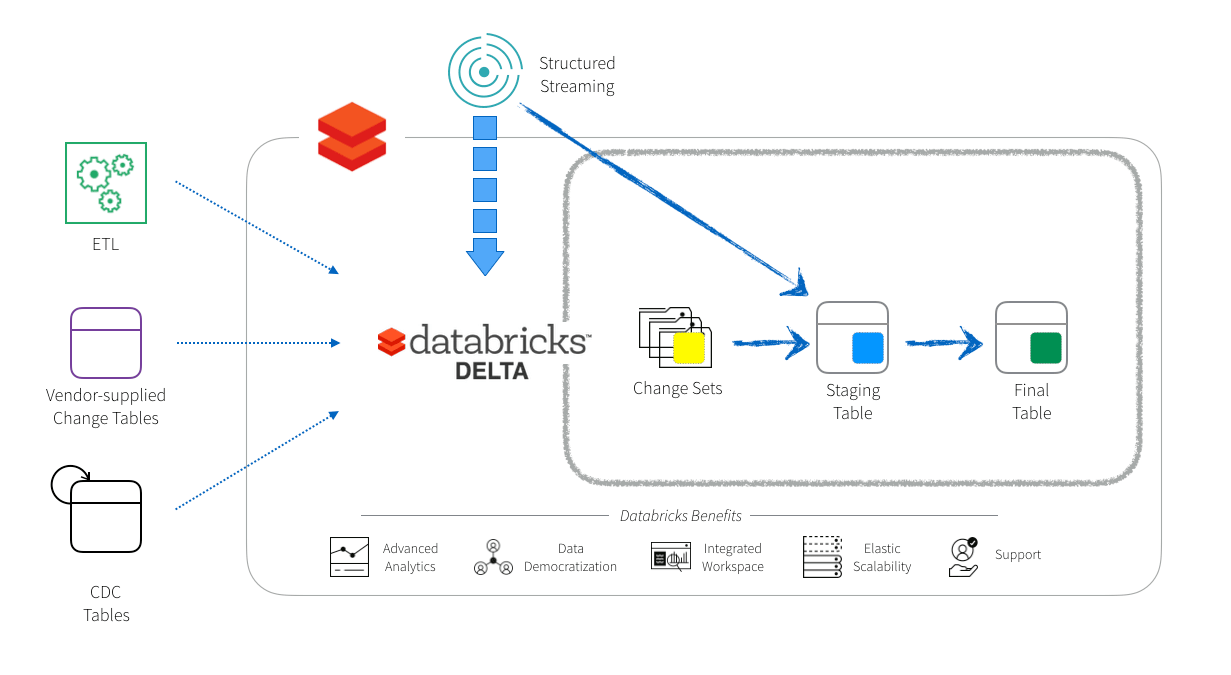

Delta Lake is deeply integrated with Spark Structured Streaming through `readStream` and `writeStream`. Delta Lake overcomes many of the limitations typically … For information on the SQL API, see the Delta Live Tables SQL documentation.

While a streaming query is active against a Delta table, new records are …

Scenarios that benefit from clustering include tables that are often filtered by high-cardinality columns.
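
One reason clustering helps such tables is data skipping: Delta Lake keeps per-file column statistics such as minimum and maximum values, and a query can skip files whose range cannot contain the filter value. The toy model below (plain Python, hypothetical helper names, not Delta's actual implementation) compares a layout where rows are clustered by an ID column with a randomly shuffled layout, and counts how many files an equality filter would still have to scan.

```python
from __future__ import annotations

import random


def split_into_files(values: list[int], rows_per_file: int) -> list[list[int]]:
    """Chunk rows into fixed-size 'files' in their current order."""
    return [values[i : i + rows_per_file] for i in range(0, len(values), rows_per_file)]


def min_max_stats(files: list[list[int]]) -> list[tuple[int, int]]:
    """Per-file (min, max) statistics, similar in spirit to what data skipping uses."""
    return [(min(f), max(f)) for f in files]


def files_to_scan(stats: list[tuple[int, int]], value: int) -> int:
    """Count files whose [min, max] range could contain `value`; the rest are skipped."""
    return sum(1 for lo, hi in stats if lo <= value <= hi)


if __name__ == "__main__":
    ids = list(range(10_000))

    # Clustered layout: rows sorted by id, so each file covers a narrow id range.
    clustered = min_max_stats(split_into_files(ids, 100))

    # Unclustered layout: same rows in random order, so each file spans most ids.
    shuffled = ids[:]
    random.Random(0).shuffle(shuffled)
    unclustered = min_max_stats(split_into_files(shuffled, 100))

    print("clustered:", files_to_scan(clustered, 4242), "of", len(clustered))
    print("unclustered:", files_to_scan(unclustered, 4242), "of", len(unclustered))
```

With 10,000 IDs in files of 100 rows, the clustered layout narrows the filter to a single file, while in the shuffled layout nearly every file's range covers the filter value, so almost nothing can be skipped.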

For Every Delta Table Property, You Can Set a Default Value for New Tables Using a SparkSession
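
The documented convention is that the session-level key for such a default is the table property name without its `delta.` prefix, appended to `spark.databricks.delta.properties.defaults.`; for example, `delta.appendOnly` becomes `spark.databricks.delta.properties.defaults.appendOnly`. The helpers below are a minimal sketch of that mapping. The function names are hypothetical, and `spark` is assumed to be an active `SparkSession`. The defaults only apply to tables created afterward in that session; existing tables keep their own properties.

```python
from __future__ import annotations

# Prefix for session-level defaults of Delta table properties.
DEFAULTS_PREFIX = "spark.databricks.delta.properties.defaults."


def default_property_conf_key(table_property: str) -> str:
    """Map e.g. 'delta.appendOnly' to the SparkSession key that sets its default."""
    if not table_property.startswith("delta."):
        raise ValueError(f"expected a 'delta.*' table property, got {table_property!r}")
    return DEFAULTS_PREFIX + table_property[len("delta."):]


def set_table_property_defaults(spark, properties: dict[str, str]) -> None:
    """Set session defaults; only tables created later in this session pick them up."""
    for prop, value in properties.items():
        spark.conf.set(default_property_conf_key(prop), value)


# Usage in a notebook where `spark` already exists:
# set_table_property_defaults(spark, {"delta.appendOnly": "true"})
```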

The Delta table is the default table format in Azure Databricks and is a feature of the open-source Delta Lake framework. The Databricks SQL Connector for Python can be used to run a basic SQL command on a cluster or SQL warehouse.
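
A minimal sketch of such a call, assuming the `databricks-sql-connector` package is installed and the connection details are stored in environment variables. The names `DATABRICKS_SERVER_HOSTNAME`, `DATABRICKS_HTTP_PATH`, and `DATABRICKS_TOKEN` are a convention chosen here, not something the connector reads on its own. The `connect` parameter is a hypothetical hook so the function can be exercised without a live workspace; by default it uses `databricks.sql.connect`.

```python
import os


def run_basic_query(query: str = "SELECT 1 AS one", connect=None) -> list:
    """Run one SQL statement on a Databricks cluster or SQL warehouse and return all rows."""
    if connect is None:
        # Imported lazily so this module loads even without the connector installed.
        from databricks import sql

        connect = sql.connect

    # Both the connection and the cursor are context managers, so they are closed
    # even if the query raises.
    with connect(
        server_hostname=os.environ["DATABRICKS_SERVER_HOSTNAME"],
        http_path=os.environ["DATABRICKS_HTTP_PATH"],
        access_token=os.environ["DATABRICKS_TOKEN"],
    ) as connection:
        with connection.cursor() as cursor:
            cursor.execute(query)
            return cursor.fetchall()


# Usage, with the three environment variables set:
# rows = run_basic_query("SELECT current_date()")
```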

CREATE TABLE <table_name> (…) USING DELTA
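
For illustration, here is a small helper that assembles such a statement from a table name and a list of column definitions; the function name and the names in the usage line are hypothetical. Table and column names are checked against a simple identifier pattern rather than quoted, and data types are passed through unchecked, so treat it as a sketch for trusted input. On Databricks the `USING DELTA` clause is optional because Delta is already the default, but spelling it out keeps the statement explicit in Spark environments where it is not.

```python
from __future__ import annotations

import re

# Plain identifiers, optionally qualified as schema.table or catalog.schema.table.
_TABLE_NAME = re.compile(r"[A-Za-z_][A-Za-z0-9_]*(\.[A-Za-z_][A-Za-z0-9_]*){0,2}")
_COLUMN_NAME = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


def create_delta_table_sql(
    table_name: str,
    columns: list[tuple[str, str]],
    if_not_exists: bool = True,
) -> str:
    """Build a CREATE TABLE ... USING DELTA statement from (name, type) pairs."""
    if not _TABLE_NAME.fullmatch(table_name):
        raise ValueError(f"unsupported table name: {table_name!r}")
    if not columns:
        raise ValueError("at least one column is required")
    for name, _ in columns:
        if not _COLUMN_NAME.fullmatch(name):
            raise ValueError(f"unsupported column name: {name!r}")
    column_list = ", ".join(f"{name} {data_type}" for name, data_type in columns)
    guard = "IF NOT EXISTS " if if_not_exists else ""
    return f"CREATE TABLE {guard}{table_name} ({column_list}) USING DELTA"


# Usage with an active SparkSession named `spark`:
# spark.sql(create_delta_table_sql("events", [("id", "BIGINT"), ("ts", "TIMESTAMP")]))
```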

Delta tables are based on the open-source Delta Lake project, a framework for … Structured Streaming can use a Delta table as a source and reads it incrementally.
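
A minimal PySpark sketch of that pattern, assuming Spark 3.1 or later (for `DataStreamReader.table` and `DataStreamWriter.toTable`) and an environment where Delta Lake is available. The function name, table names, and checkpoint path are hypothetical. The source table is read incrementally as new versions are committed, and the checkpoint location lets a restarted query resume from where it stopped.

```python
def start_delta_to_delta_stream(spark, source_table: str, target_table: str, checkpoint_dir: str):
    """Stream new rows from one Delta table into another; returns the StreamingQuery."""
    # Delta as a streaming source: newly committed table versions are read incrementally.
    source = spark.readStream.table(source_table)
    return (
        source.writeStream
        .format("delta")
        .outputMode("append")
        # Streaming progress is recorded here so a restart resumes instead of starting over.
        .option("checkpointLocation", checkpoint_dir)
        .toTable(target_table)
    )


# Usage (hypothetical names):
# query = start_delta_to_delta_stream(spark, "bronze_events", "silver_events", "/tmp/checkpoints/silver_events")
# query.awaitTermination()
```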

Using a Little PySpark Code to Create a Delta Table in a Synapse Notebook
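
A minimal sketch of that kind of PySpark code, assuming a Synapse Spark pool with Delta Lake available and the notebook's built-in `spark` session. The function names and the storage path in the usage comments are hypothetical placeholders. The format is set to `"delta"` explicitly rather than relying on the pool's default data source.

```python
def save_as_delta(df, path: str, mode: str = "overwrite") -> None:
    """Write a Spark DataFrame to `path` as a Delta table."""
    # "overwrite" replaces the table contents in a new version; "append" adds rows.
    df.write.format("delta").mode(mode).save(path)


def load_delta(spark, path: str):
    """Read the Delta table stored at `path` back into a DataFrame."""
    return spark.read.format("delta").load(path)


# Usage in a Synapse notebook (placeholder path):
# df = spark.createDataFrame([(1, "a"), (2, "b")], ["id", "value"])
# save_as_delta(df, "abfss://<container>@<account>.dfs.core.windows.net/delta/demo")
# load_delta(spark, "abfss://<container>@<account>.dfs.core.windows.net/delta/demo").show()
```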

Delta Lake is the default for all reads, writes, and table creation commands in Azure Databricks. If the Delta Lake table is already stored in the catalog (also known as the metastore), use `read_table`.
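
In the pandas API on Spark (`pyspark.pandas`), `read_table` reads a table registered in the catalog, while `read_delta` reads a Delta table from a storage path. The helper below picks between them. Its name, the `ps` parameter (a hook for passing in the module or a stand-in), and the rule that anything containing `/` or `:` is a path are all assumptions made for this sketch.

```python
def read_delta_as_pandas_on_spark(name_or_path: str, ps=None):
    """Return a pandas-on-Spark DataFrame for a catalog table or a Delta path."""
    if ps is None:
        # Imported lazily so the helper can be defined without PySpark installed.
        import pyspark.pandas as ps

    # Heuristic: catalog names look like "db.table"; storage paths contain "/" or a scheme.
    if "/" in name_or_path or ":" in name_or_path:
        return ps.read_delta(name_or_path)
    return ps.read_table(name_or_path)


# Usage (hypothetical names):
# orders = read_delta_as_pandas_on_spark("sales.orders")
# events = read_delta_as_pandas_on_spark("/mnt/delta/events")
```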

Whether You’re Using Apache Spark DataFrames or SQL, You Get All the Benefits of Delta Lake Just by Saving Your Data to the …

All tables on Databricks are Delta tables by default. An Azure Databricks cluster is a set of computation resources and configurations on which you run data engineering, data science, and data analytics workloads.