JSON files can be loaded to create Delta Live Tables tables. A simple example using Scala and SQL helps illustrate Delta Lake features: in Scala, `val myTable = DeltaTable.forPath(myPath)` returns a handle to the Delta table stored at `myPath`. Databricks recommends using Auto Loader with Delta Live Tables for most data ingestion tasks from cloud object storage. With Delta Live Tables, you define the transformations to perform on your data.

If the Delta Lake table is already stored in the catalog (also known as the metastore), use `read_table`. While a streaming query is active against a Delta table, new records are processed idempotently as new table versions commit to the source table. Databricks uses Delta Lake for all tables by default. Each Delta table records the minimum protocol reader version that a reader must support in order to read from the table. A table can be loaded by name and displayed with `people_df = spark.read.table(table_name)` followed by `display(people_df)`.

You access data in Delta tables by the table name or the table path, and you can easily load tables into DataFrames. Auto Loader and Delta Live Tables are designed to incrementally and idempotently load ever-growing data as it arrives in cloud storage. It's better to read in …

This guide demonstrates how Delta Live Tables enables you to develop scalable, reliable data pipelines that conform to the data quality standards of a lakehouse architecture. Whether you're using Apache Spark DataFrames or SQL, you get all the benefits of Delta Lake just by saving your data to the lakehouse with default settings. To load a Delta table into a PySpark DataFrame, use `spark.read.format("delta").load(path)`. Steps 1 through 5 are common to Scala and SQL. To access the Delta table from SQL, you have to register it in the metastore.
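Registering a path-based Delta table in the metastore so that SQL can see it might look like the sketch below. It only builds the statement text; the helper name `register_delta_table_sql`, the table name, and the path are hypothetical, and the statement is assumed to be run through an existing `SparkSession` with Delta Lake available.

```python
def register_delta_table_sql(table_name: str, path: str) -> str:
    """Build a Spark SQL statement that registers an existing Delta
    directory as a table in the metastore (hypothetical helper)."""
    # Keep the sketch simple: refuse paths that would break the SQL literal.
    if "'" in path:
        raise ValueError("path must not contain single quotes")
    return (
        f"CREATE TABLE IF NOT EXISTS {table_name} "
        f"USING DELTA LOCATION '{path}'"
    )


# Assumed usage with an existing SparkSession named `spark`:
#   spark.sql(register_delta_table_sql("events", "/tmp/delta/events"))
#   spark.sql("SELECT * FROM events")
```

Because the table created this way points at an external location, it is an unmanaged table in the sense used elsewhere on this page.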

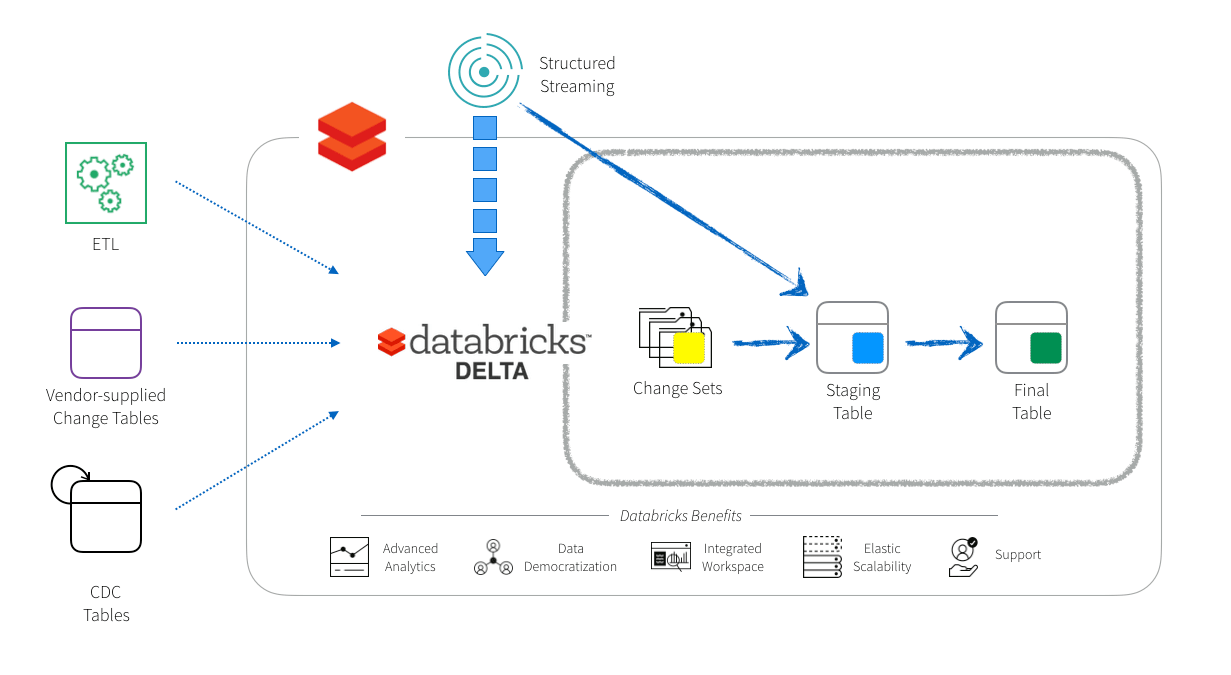

Read Delta Sharing Shared Tables Using Structured Streaming

As another option for querying shared data, you can also create queries that use shared table names in Delta Sharing.

For creating a Delta table, a template can be used.

For Example, The Following Code Loads The Delta Table.
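A minimal sketch of such loading code in PySpark, assuming an existing `SparkSession` is passed in as `spark`. The helper name `load_delta_table` and its parameters are hypothetical and not part of any library; it uses `spark.read.table` for a table registered in the metastore and `spark.read.format("delta").load` for a table addressed by path.

```python
def load_delta_table(spark, *, table_name=None, path=None):
    """Return a DataFrame for a Delta table identified by name or by path.

    `spark` is assumed to be an existing SparkSession with Delta Lake
    available; this helper itself is illustrative only.
    """
    # Exactly one of the two identifiers must be given.
    if (table_name is None) == (path is None):
        raise ValueError("pass exactly one of table_name or path")
    if table_name is not None:
        # Table registered in the catalog (metastore): read it by name.
        return spark.read.table(table_name)
    # Otherwise read the Delta files directly from their location.
    return spark.read.format("delta").load(path)


# Assumed usage:
#   people_df = load_delta_table(spark, table_name="people")
#   events_df = load_delta_table(spark, path="/tmp/delta/events")
```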

Structured Streaming incrementally reads Delta tables. In the pandas API on Spark, `read_delta` reads a Delta Lake table on some file system and returns a DataFrame. For protocol details, see "How does Databricks manage Delta Lake feature compatibility?". All tables on Databricks are Delta tables by default.
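An incremental read with Structured Streaming can be sketched in the same way. As an assumption, `spark` is an existing `SparkSession` with Delta Lake available; the helper name `stream_delta_table` is hypothetical. It relies on `spark.readStream.table` for a table name and `spark.readStream.format("delta").load` for a path.

```python
def stream_delta_table(spark, *, table_name=None, path=None):
    """Return a streaming DataFrame that incrementally reads a Delta table.

    Illustrative helper only; `spark` is assumed to be a SparkSession.
    """
    # Exactly one of the two identifiers must be given.
    if (table_name is None) == (path is None):
        raise ValueError("pass exactly one of table_name or path")
    if table_name is not None:
        # Stream from a table registered in the catalog.
        return spark.readStream.table(table_name)
    # Stream from the Delta files at the given location.
    return spark.readStream.format("delta").load(path)


# Assumed usage: the returned DataFrame still needs a sink to start running,
# for example stream_df.writeStream.format("console").start()
```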

Some Features Of Delta Tables

When an unmanaged Delta table is dropped, only the table definition is removed; the underlying data files remain. Delta tables store data in the Parquet file format, which is columnar.

To Load A Delta Table Into A PySpark DataFrame, You Can Use spark.read.format("delta").load().
