A common question is how to save a pandas DataFrame to CSV directly to S3 from Python, or how to read a CSV file from S3 and print the first five rows of the resulting DataFrame. Reading a single file is straightforward. s3fs is a Pythonic file interface to S3.

Spark SQL provides `spark.read.csv(path)` to read a CSV file from Amazon S3, the local file system, HDFS, and many other data sources into a Spark DataFrame. In pandas, the equivalent entry point is `read_csv`. Using a Jupyter notebook on a local machine, I walk through some useful optional p…

A first attempt often starts with `import pandas as pd` and `import boto`, followed by `data = …`. If you are already using smart_open, just do `data = pd.read_csv(smart_open.smart_open('s3://randomdatagossip/gossips.csv'))`. For a local file, the basic pattern is `df = pd.read_csv('data.csv')` followed by `print(df.to_string())`.

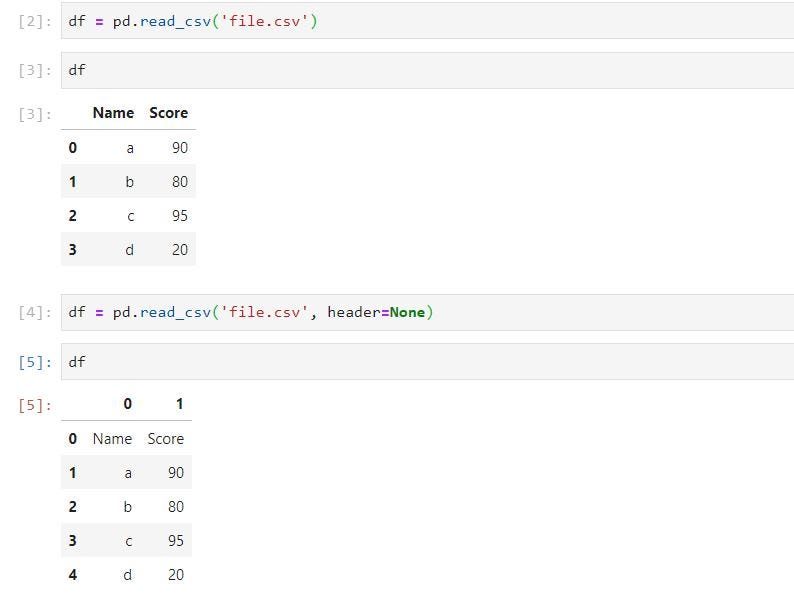

Boto3 performance is a bottleneck with parallelized loads. pandas (starting with version 1.2.0) supports reading and writing files stored in S3 using the s3fs Python package. The path string passed to `read_csv` could be a URL. In this post we shall see how to read a CSV file from an S3 bucket and load it into a pandas DataFrame. A related question covers reading a large CSV from an S3 bucket using pandas in AWS SageMaker.
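As a minimal sketch of the s3fs-backed route, assuming s3fs is installed and AWS credentials are available in the environment, `read_csv` can be given an `s3://` URL directly. The bucket and key names are hypothetical, and `s3_uri` and `read_csv_from_s3_url` are helper names introduced only for illustration.

```python
import pandas as pd


def s3_uri(bucket: str, key: str) -> str:
    """Build an s3:// URL from a bucket name and an object key."""
    return f"s3://{bucket}/{key.lstrip('/')}"


def read_csv_from_s3_url(bucket: str, key: str, **read_csv_kwargs) -> pd.DataFrame:
    """Read one CSV object by URL; pandas delegates s3:// access to s3fs."""
    return pd.read_csv(s3_uri(bucket, key), **read_csv_kwargs)


# Hypothetical usage (needs network access, credentials, and s3fs):
# df = read_csv_from_s3_url("my-example-bucket", "data/example.csv")
# print(df.head())  # first five rows
```

Keeping the URL construction in its own function makes it easy to check without touching the network.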

Here Is My Full Code:

I am trying to read a CSV file located in an AWS S3 bucket into memory as a pandas DataFrame, using code that starts with `import pandas as pd` and `import boto`, followed by `data = …`. This tutorial walks through how to read multiple CSV files into Python from AWS S3. In this example we first set our AWS credentials and region, as well as the S3 bucket and file path for the CSV file.
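The steps described above (region and credentials first, then bucket and file path) might look like the following sketch. It swaps in boto3 for the older `boto` package named in the question, and it takes the S3 client as an argument so the parsing can be exercised without AWS access. The function name, region, bucket, and key are all assumptions for illustration.

```python
import io

import pandas as pd


def read_csv_from_s3(client, bucket: str, key: str, **read_csv_kwargs) -> pd.DataFrame:
    """Download one object with an S3 client and parse it as CSV in memory."""
    response = client.get_object(Bucket=bucket, Key=key)
    # response["Body"] is a streaming object; read it fully into a buffer.
    buffer = io.BytesIO(response["Body"].read())
    return pd.read_csv(buffer, **read_csv_kwargs)


# Hypothetical usage; region, bucket and key are placeholders.
# Credentials are best left to the environment or an AWS profile.
# import boto3
# session = boto3.Session(region_name="us-east-1")
# df = read_csv_from_s3(session.client("s3"), "my-example-bucket", "data/example.csv")
# print(df.head())
```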

Boto3 Performance Is A Bottleneck With Parallelized Loads.

Before using `read_csv`, we must import the pandas library; pandas then loads the CSV file into a DataFrame. Some tools go further and read CSV file(s) from a received S3 prefix or a list of S3 object paths.
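Reading from a prefix can be sketched with plain boto3 calls, under the assumption that every `.csv` object under the prefix shares the same columns. The helper names `list_csv_keys` and `read_csv_prefix` are hypothetical, and the objects are fetched one after another rather than in parallel.

```python
import io

import pandas as pd


def list_csv_keys(client, bucket: str, prefix: str) -> list:
    """Return the keys under a prefix that end in .csv, in sorted order."""
    paginator = client.get_paginator("list_objects_v2")
    keys = []
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        # "Contents" is absent from a page when nothing matches.
        for item in page.get("Contents", []):
            if item["Key"].endswith(".csv"):
                keys.append(item["Key"])
    return sorted(keys)


def read_csv_prefix(client, bucket: str, prefix: str, **read_csv_kwargs) -> pd.DataFrame:
    """Read every CSV under a prefix sequentially and concatenate the rows."""
    frames = []
    for key in list_csv_keys(client, bucket, prefix):
        body = client.get_object(Bucket=bucket, Key=key)["Body"].read()
        frames.append(pd.read_csv(io.BytesIO(body), **read_csv_kwargs))
    if not frames:
        raise FileNotFoundError(f"no CSV objects under s3://{bucket}/{prefix}")
    return pd.concat(frames, ignore_index=True)
```

A call such as `read_csv_prefix(boto3.client("s3"), "my-example-bucket", "logs/")` would return one DataFrame with a fresh integer index; the bucket and prefix here are placeholders.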

Reading A Large CSV From An S3 Bucket Using Python Pandas In AWS SageMaker.

s3fs is a Pythonic file interface to S3. The path string passed to `read_csv` could be a URL, so with s3fs installed an S3 location can be given directly.
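For a file too large to hold comfortably in memory, one option is the `chunksize` parameter of `read_csv`, which yields the data piece by piece. This is a sketch of that general technique, not the approach from the original question. `count_rows_in_chunks` is a hypothetical helper, and an in-memory string stands in for the S3 object.

```python
import io

import pandas as pd


def count_rows_in_chunks(source, chunksize: int = 100_000) -> int:
    """Stream a CSV in fixed-size chunks and count its data rows."""
    total = 0
    # With chunksize set, read_csv yields DataFrames instead of returning one.
    for chunk in pd.read_csv(source, chunksize=chunksize):
        total += len(chunk)
    return total


# Local stand-in for a large S3 object; an s3:// URL could be passed instead
# when s3fs is installed.
sample = io.StringIO("id,value\n1,10\n2,20\n3,30\n")
print(count_rows_in_chunks(sample, chunksize=2))  # prints 3
```

The same loop can aggregate, filter, or write out each chunk, so only `chunksize` rows are parsed at a time.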

I Am Able To Read A Single File.

This tutorial will teach you how to read a CSV file from an S3 bucket in AWS Lambda using the requests library or the boto3 library. Going the other way, a pandas DataFrame can be saved to CSV directly to S3 from Python.
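Saving a DataFrame to S3 can be sketched by serializing it to CSV in memory and uploading the result with boto3's `put_object`. The function name `write_csv_to_s3`, the bucket, and the key are assumptions, and the client is passed in so the serialization can be checked without AWS access.

```python
import io

import pandas as pd


def write_csv_to_s3(client, df: pd.DataFrame, bucket: str, key: str) -> None:
    """Serialize a DataFrame to CSV in memory and upload it as one object."""
    buffer = io.StringIO()
    df.to_csv(buffer, index=False)
    client.put_object(Bucket=bucket, Key=key, Body=buffer.getvalue().encode("utf-8"))


# Hypothetical usage; bucket and key are placeholders.
# import boto3
# write_csv_to_s3(boto3.client("s3"), df, "my-example-bucket", "out/example.csv")
# With s3fs installed, df.to_csv("s3://my-example-bucket/out/example.csv", index=False)
# is a shorter alternative.
```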