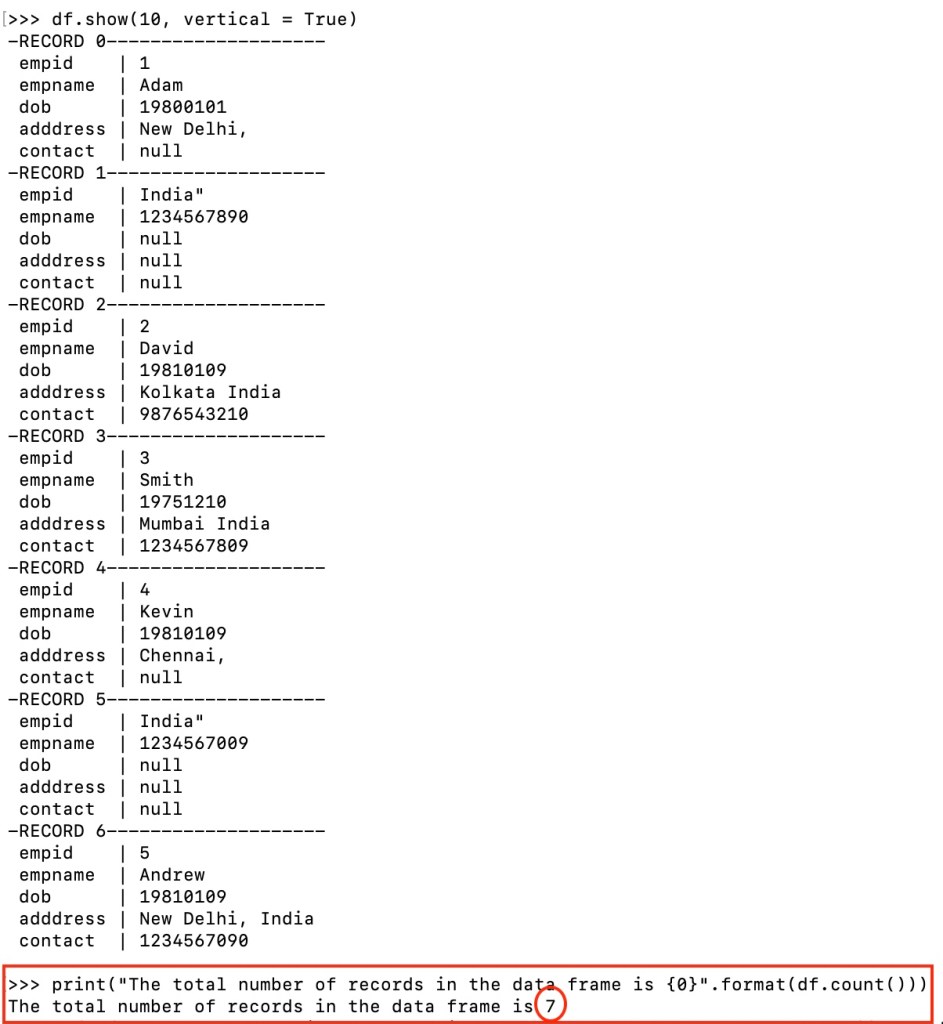

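Reading multiple CSV files from Azure Blob Storage using Databricks PySpark. First, let's create a DataFrame by reading a CSV file. When a file such as `'python/test_support/sql/ages.csv'` is read without a header or schema, `df.dtypes` returns `[('_c0', 'string'), ('_c1', 'string')]`. The same file can also be loaded as an RDD of lines with `rdd = sc.textFile('python/test_support/sql/ages.csv')`, from which a second DataFrame, `df2`, is then built. `DataFrame.describe(*cols)` computes basic statistics.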
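The headerless read and the RDD route mentioned above can be sketched as follows. This is a minimal sketch, assuming an existing SparkSession is passed in as `spark` (as in the PySpark shell or a notebook). The helper names `write_ages_csv` and `read_two_ways` are hypothetical, and the sample rows are made up for illustration.

```python
import os
import tempfile


def write_ages_csv(directory):
    """Write a small headerless CSV (made-up sample rows) and return its path."""
    path = os.path.join(directory, "ages.csv")
    with open(path, "w") as handle:
        handle.write("Joe,20\nTom,30\nHyukjin,25\n")
    return path


def read_two_ways(spark, path):
    """Return (df, df2): the same file read directly and via an RDD of lines."""
    # Direct read: with no header and no schema, columns are named _c0, _c1, ...
    # and every column is typed as string.
    df = spark.read.csv(path)

    # textFile lives on the SparkContext, not on the SparkSession itself.
    rdd = spark.sparkContext.textFile(path)

    # DataFrameReader.csv also accepts an RDD of CSV-formatted strings.
    df2 = spark.read.csv(rdd)
    return df, df2
```

With a live session, `df.dtypes` and `df2.dtypes` should both report two string columns named `_c0` and `_c1` for this file.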

When I load this file in another notebook … PySpark can read multiple CSV files at once. Also, you need to understand if … In the AWS Management Console, … You can use the `spark.read.csv()` function to read a CSV file into a PySpark DataFrame.
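A minimal sketch of the `spark.read.csv()` call described above, assuming a SparkSession is already available and passed in as `spark`. The helpers `write_sample_csv` and `read_csv_as_dataframe`, the file name, and the sample rows are hypothetical and only serve the illustration.

```python
import csv
import os
import tempfile


def write_sample_csv(directory, name="people.csv"):
    """Write a tiny CSV with a header row (made-up data) and return its path."""
    path = os.path.join(directory, name)
    with open(path, "w", newline="") as handle:
        writer = csv.writer(handle)
        writer.writerow(["name", "age"])
        writer.writerows([["Joe", 20], ["Tom", 30]])
    return path


def read_csv_as_dataframe(spark, path):
    """Read one CSV file into a PySpark DataFrame.

    header=True takes column names from the first row; inferSchema=True asks
    Spark to guess column types instead of reading every column as a string.
    """
    return spark.read.csv(path, header=True, inferSchema=True)
```

In a notebook or the PySpark shell, `read_csv_as_dataframe(spark, write_sample_csv(tempfile.mkdtemp())).show()` would display the two sample rows. Note that `inferSchema=True` costs an extra pass over the data, so an explicit schema may be preferable for large files.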

Reading a CSV file using the `csv()` method: to create a PySpark DataFrame from a CSV file, we can use the `DataFrameReader.csv()` method, which is reached through `spark.read.csv()` (it is not a method of the DataFrame itself). Its `path` argument is the string storing the location of the CSV file to be read. The matching writer, `DataFrame.write.csv()`, saves a DataFrame back out as CSV.

`from pyspark.sql import SQLContext`, `import pyspark`, `from` … I can't seem to get it to read. First, `textFile` exists on the `SparkContext` (called `sc` in the REPL), not on the `SparkSession` object (called `spark` in the REPL). The `read.csv()` function in PySpark allows you to read a CSV file and store its contents in a PySpark DataFrame. Its `sep` parameter (str, default `','`) is the delimiter to use.

Here we are going to read a single CSV into a DataFrame using `spark.read.csv` and then create a pandas DataFrame from this data using `.toPandas()`. Read your CSV file in the following way: `df = spark.read.format("csv").option("multiline", True).option("quote", "\"").option("escape", "\"").option("header", True).load(df_path)`.

Given files in CSV format, you need to work out and understand the functional dependencies between columns. I am saving data to a CSV file from a pandas DataFrame with 318477 rows using `df.to_csv("preprocessed_data.csv")`. When I load this file in another notebook …
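The multi-line read with explicit quote and escape characters can be sketched like this. It assumes a SparkSession passed in as `spark`. The helpers `write_multiline_csv` and `read_multiline_csv` and the sample record are hypothetical. The option spelling `multiLine` is used here, and Spark treats option keys case-insensitively.

```python
import csv
import os
import tempfile


def write_multiline_csv(directory):
    """Write a CSV whose second field contains a line break and double quotes."""
    path = os.path.join(directory, "notes.csv")
    with open(path, "w", newline="") as handle:
        writer = csv.writer(handle)  # default dialect doubles embedded quotes
        writer.writerow(["id", "note"])
        writer.writerow([1, 'first line\nsecond line with "quotes"'])
    return path


def read_multiline_csv(spark, path):
    """Read a CSV in which quoted fields may span several physical lines."""
    return (
        spark.read.format("csv")
        .option("multiLine", True)  # let one record span several lines
        .option("quote", '"')       # character that wraps quoted fields
        .option("escape", '"')      # a doubled quote inside a field is literal
        .option("header", True)     # first row holds the column names
        .load(path)
    )
```

Setting `escape` to the quote character matters because Spark's default escape character is a backslash, while files written by pandas or Python's `csv` module escape a quote by doubling it.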

Also, You Need To Understand If.

EC2 provides scalable computing capacity in the cloud and will host your PySpark applications. When reading a CSV file, the `sep` parameter (str, default `','`) sets the delimiter to use.
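As an illustration of the `sep` parameter, here is a minimal sketch that reads a semicolon-delimited file. It assumes a SparkSession passed in as `spark`. The helpers `write_semicolon_csv` and `read_delimited` and the sample data are hypothetical.

```python
import os
import tempfile


def write_semicolon_csv(directory):
    """Write a small semicolon-delimited file (made-up data) and return its path."""
    path = os.path.join(directory, "scores.csv")
    with open(path, "w") as handle:
        handle.write("name;score\nJoe;7\nTom;9\n")
    return path


def read_delimited(spark, path, sep=","):
    """Read a delimited text file, splitting fields on `sep` (default: comma)."""
    return spark.read.csv(path, sep=sep, header=True)
```

Calling `read_delimited(spark, path, sep=";")` on the sample file should yield the columns `name` and `score`. With the default comma, each line would instead land in a single column.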

New To PySpark And Would Like To Read A CSV File Into A DataFrame.

Import CSV file contents into PySpark DataFrames: how can I … `DataFrame.describe(*cols)` computes basic statistics (count, mean, standard deviation, minimum and maximum) for the given numeric and string columns.
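A short sketch of how the output of `describe()` might be consumed, assuming `df` is a DataFrame that has already been read from a CSV file. The helper name `summary_as_dict` is hypothetical.

```python
def summary_as_dict(df, column):
    """Collect describe() output for one column into a plain dictionary.

    describe() returns a small DataFrame with a "summary" column naming each
    statistic and one column per described field; the values are strings.
    """
    rows = df.describe(column).collect()
    return {row["summary"]: row[column] for row in rows}
```

Because the statistics come back as strings, a value such as the mean needs an explicit `float(...)` conversion before it is used in arithmetic.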

You Know That From The Files That Are In CSV Format, You Need To Design And Understand Functional Dependencies Between Columns.

To set up an EC2 instance: … I want to read and process these CSV files with a parallel …
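Reading several CSV files in one call can be sketched as follows. Spark distributes the input across tasks on its own, so no explicit threading is needed. The sketch assumes a SparkSession passed in as `spark` and files that share the same columns. The helpers `write_part_files` and `read_many` and the file names are hypothetical, and local paths stand in for cloud storage paths.

```python
import os
import tempfile


def write_part_files(directory, count=3):
    """Write `count` small CSV files with identical headers; return their paths."""
    paths = []
    for i in range(count):
        path = os.path.join(directory, f"part_{i}.csv")
        with open(path, "w") as handle:
            handle.write(f"id,value\n{i},{i * 10}\n")
        paths.append(path)
    return paths


def read_many(spark, paths):
    """Read several CSV files into a single DataFrame.

    `paths` may be a list of file paths, or one string naming a directory
    or a glob pattern such as "/data/*.csv".
    """
    return spark.read.csv(paths, header=True)
```

Passing the directory itself, for example `read_many(spark, directory)`, reads every file inside it, which is usually simpler than listing the files one by one.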

I Am Saving Data To A CSV File From A Pandas DataFrame With 318477 Rows Using df.to_csv("preprocessed_data.csv").

Second, for CSV data, I would … In this tutorial, you have learned how to read a single CSV file, multiple CSV files, and all files from a local folder into a Spark DataFrame, using multiple options to change … CSV is a widely used data format for processing data.