Most Apache Spark queries return a DataFrame. This includes reading from a table, loading data from files, and operations that transform data. In the pandas API on Spark, the table reader has the signature `pyspark.pandas.read_table(name: str, index_col: Union[str, List[str], None] = None) → pyspark.pandas.frame.DataFrame`.

Spark SQL adapts the execution plan at runtime, for example by automatically setting the number of reducers and choosing join algorithms. PySpark SQL supports reading a Hive table into a DataFrame in two ways: the `SparkSession.read.table()` method and the `SparkSession.sql()` statement.

With Spark's DataFrame support, you can also use PySpark to read from and write to Apache Phoenix tables. To load a DataFrame, give the Phoenix connector a table name, such as `TABLE1`, and the ZooKeeper URL of the Phoenix cluster.
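Below is a sketch of reading and writing a Phoenix table from PySpark. It makes these assumptions:

- The Apache Phoenix Spark connector jar is on the Spark classpath.
- The connector uses the `org.apache.phoenix.spark` format with `table` and `zkUrl` options.

Format names and option keys can differ between connector versions, so check the one you deploy. The helper names are hypothetical.

```python
from typing import Dict

# Format name of the classic phoenix-spark connector (assumption: verify for your version).
PHOENIX_FORMAT = "org.apache.phoenix.spark"


def phoenix_options(table: str, zk_url: str) -> Dict[str, str]:
    """Hypothetical helper: connector options naming the table and the ZooKeeper URL."""
    return {"table": table, "zkUrl": zk_url}


def read_phoenix_table(spark, table: str, zk_url: str):
    """Hypothetical helper: load a Phoenix table (e.g. TABLE1) into a Spark DataFrame."""
    return spark.read.format(PHOENIX_FORMAT).options(**phoenix_options(table, zk_url)).load()


def write_phoenix_table(df, table: str, zk_url: str) -> None:
    """Hypothetical helper: write rows into an existing Phoenix table.

    The phoenix-spark connector upserts rows and expects save mode "overwrite".
    """
    (
        df.write.format(PHOENIX_FORMAT)
        .mode("overwrite")
        .options(**phoenix_options(table, zk_url))
        .save()
    )
```

With a live cluster, usage would look like `df = read_phoenix_table(spark, "TABLE1", "localhost:2181")`, where `localhost:2181` is a placeholder ZooKeeper quorum.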

For JDBC sources the usage is `spark.read.jdbc()`, where `read` is a `DataFrameReader` object. In other words, `pyspark.sql.DataFrameReader.jdbc()` is what reads a JDBC table into a PySpark DataFrame. The method takes a JDBC URL, a table name, and a dictionary of connection properties, and loads the specified input table into a Spark DataFrame. To connect PySpark to SQL Server and read and write a table, you need the SQL Server address and port, plus the database name and login credentials.
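Here is a sketch of the SQL Server steps using `spark.read.jdbc()` and `DataFrame.write.jdbc()`. It assumes the Microsoft JDBC driver (`com.microsoft.sqlserver.jdbc.SQLServerDriver`) is on the Spark classpath. The host, port, database, table, and credentials are placeholders, and the helper names are hypothetical.

```python
from typing import Dict


def sqlserver_jdbc_url(host: str, port: int, database: str) -> str:
    """Hypothetical helper: build a Microsoft JDBC URL from the server address and port."""
    return f"jdbc:sqlserver://{host}:{port};databaseName={database}"


def connection_properties(user: str, password: str) -> Dict[str, str]:
    """Hypothetical helper: the properties dict passed to read.jdbc / write.jdbc."""
    return {
        "user": user,
        "password": password,
        # Driver class of the Microsoft JDBC driver; its jar must be on the classpath.
        "driver": "com.microsoft.sqlserver.jdbc.SQLServerDriver",
    }


def read_sqlserver_table(spark, url: str, table: str, props: Dict[str, str]):
    # spark.read is a DataFrameReader; jdbc() loads the whole table into a DataFrame.
    return spark.read.jdbc(url=url, table=table, properties=props)


def write_sqlserver_table(df, url: str, table: str, props: Dict[str, str]) -> None:
    # mode="append" adds rows to an existing table; "overwrite" would replace its contents.
    df.write.jdbc(url=url, table=table, mode="append", properties=props)
```

Against a reachable server, you would build `url = sqlserver_jdbc_url("localhost", 1433, "testdb")` and then call `read_sqlserver_table(spark, url, "dbo.people", connection_properties("<user>", "<password>"))`. Every argument there is a placeholder. Appending is the safer default for writes because it never drops the target table.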

Loading And Saving Data From Files

The generic load and save functions read files into a DataFrame and write a DataFrame back out. For example, in Python, `df = spark.read.load("examples/src/main/resources/people.json", format="json")` loads a JSON file. Then `df.select("name", "age").write.save("namesAndAges.parquet", ...)` writes the `name` and `age` columns to `namesAndAges.parquet`. Once your table is created, you can insert records.

Tables And Delta Lake In The Pandas API On Spark

The pandas API on Spark has matching readers and writers. For tables, use `pyspark.pandas.read_table` and `pyspark.pandas.DataFrame.to_table`. For Delta Lake tables, use `pyspark.pandas.read_delta` and `pyspark.pandas.DataFrame.to_delta`.


How spark.read.table() Relates To spark.table()

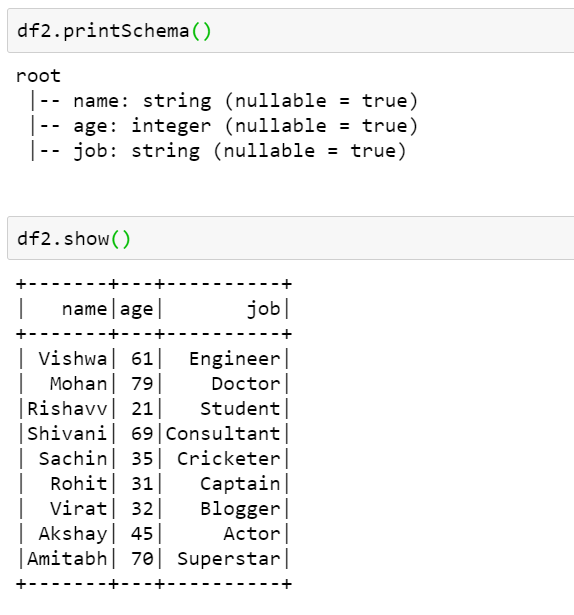

The `spark.read.table()` function is a method of the `org.apache.spark.sql.DataFrameReader` class, and it in turn calls `spark.table()`. Understandably, this raises the question of why Spark provides two syntaxes that do the same thing: for a given table name, both return the same DataFrame.