There are several ways to load an ONNX model from a file, one of which is parsing it into a TensorRT network.

Suppose I want to read and load an ONNX model: how should I process the model.pb file? ONNX aims to make it easier to deploy any kind of machine learning model, coming from any type of ML framework, including TensorFlow. ONNX also implements a Python runtime that is useful for understanding a model.

Open Neural Network Exchange (ONNX) is an open standard format for representing machine learning models. The ONNX documentation lists all the ONNX operators. The Python runtime that ONNX implements is not intended to be used for production, and performance is not a goal. Once a model file is opened, the graph of the model is displayed.

As I noticed, ONNX models have a model.pb file and a few zip files. To get started with ONNX Runtime in Python, a quick guide covers installing the packages needed to use ONNX for model serialization and inference with ORT. To view a model, click Open Model and specify an ONNX or prototxt file.

By Clicking On The Layer, You Can See The Kernel Size.

The content of an ONNX file is just a protobuf message. You can load it by initializing an `onnx::ModelProto` and then calling one of its `ParseFrom...` methods.
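To illustrate what "just a protobuf message" means, here is a minimal sketch that walks the top-level fields of a serialized `ModelProto` using only the Python standard library. It assumes the standard protobuf wire format and the field numbers from `onnx.proto` as I understand them (1 for `ir_version`, 2 for `producer_name`, 7 for `graph`). The helper names `read_varint`, `iter_fields`, and `summarize_model` are hypothetical, and the sample bytes are hand-built for illustration rather than taken from a real model.

```python
from typing import Dict, Iterator, Optional, Tuple, Union


def read_varint(buf: bytes, pos: int) -> Tuple[int, int]:
    """Decode one protobuf varint starting at pos; return (value, next_pos)."""
    result = 0
    shift = 0
    while True:
        byte = buf[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if not byte & 0x80:  # high bit clear: last byte of the varint
            return result, pos
        shift += 7


def iter_fields(buf: bytes) -> Iterator[Tuple[int, Union[int, bytes]]]:
    """Yield (field_number, raw_value) for each top-level field in buf."""
    pos = 0
    while pos < len(buf):
        key, pos = read_varint(buf, pos)
        field_number, wire_type = key >> 3, key & 0x7
        if wire_type == 0:  # varint
            value, pos = read_varint(buf, pos)
        elif wire_type == 2:  # length-delimited (strings, nested messages)
            length, pos = read_varint(buf, pos)
            value = buf[pos:pos + length]
            pos += length
        elif wire_type == 1:  # fixed 64-bit
            value = buf[pos:pos + 8]
            pos += 8
        elif wire_type == 5:  # fixed 32-bit
            value = buf[pos:pos + 4]
            pos += 4
        else:
            raise ValueError(f"unsupported wire type {wire_type}")
        yield field_number, value


def summarize_model(buf: bytes) -> Dict[str, Optional[Union[int, str]]]:
    """Pick out a few ModelProto fields (field numbers are an assumption)."""
    summary: Dict[str, Optional[Union[int, str]]] = {
        "ir_version": None,
        "producer_name": None,
        "graph_bytes": None,
    }
    for number, value in iter_fields(buf):
        if number == 1 and isinstance(value, int):
            summary["ir_version"] = value
        elif number == 2 and isinstance(value, bytes):
            summary["producer_name"] = value.decode("utf-8")
        elif number == 7 and isinstance(value, bytes):
            summary["graph_bytes"] = len(value)  # size of the nested GraphProto
    return summary


# Hand-built sample: ir_version=8, producer_name="pytorch", empty graph.
sample = b"\x08\x08" + b"\x12\x07pytorch" + b"\x3a\x00"
summary = summarize_model(sample)
```

For a real file you would pass `open(path, "rb").read()` to `summarize_model`. In practice the generated protobuf classes (the `onnx` Python package, or `onnx::ModelProto` in C++) are the better choice, since they decode the full nested graph.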

When I Import A New ONNX Model.

When an ONNX model is parsed from a file into a TensorRT network, the parser returns true if the model was parsed successfully. You can follow the tutorial for a detailed explanation.

The torch.onnx Module Captures The.

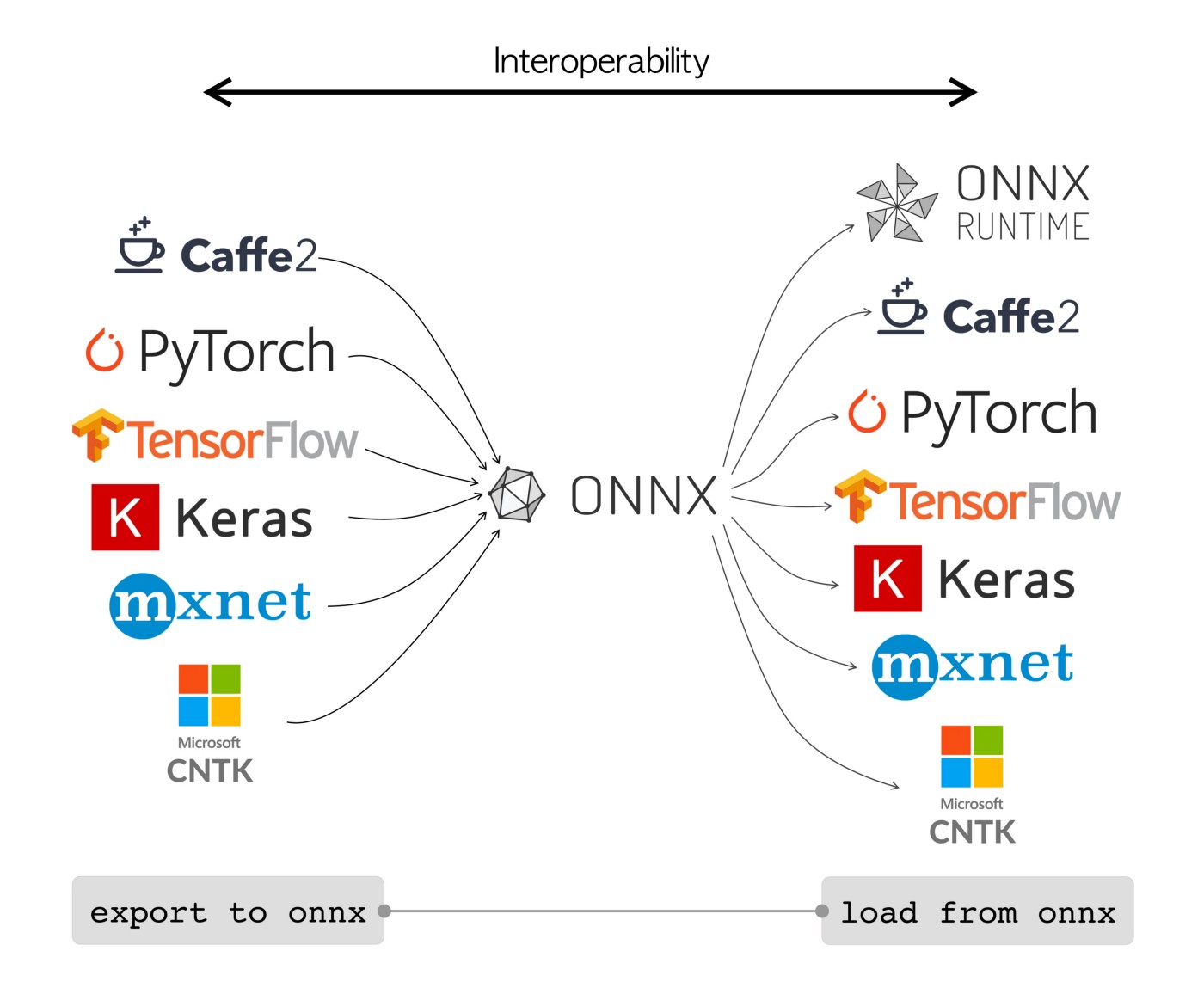

In MATLAB, `net = importONNXNetwork(modelfile)` imports a pretrained ONNX™ (Open Neural Network Exchange) network from the file `modelfile`.

I'm Working On Model Inference Using OpenCV CUDA DNN Module.

I'm updating the PyTorch version in our project, but I've encountered an issue. The RAM usage goes up quite a lot (several GB). When calling `readNetFromONNX`, I'm getting an error message.