I want to create a DataFrame of text files where each row represents a whole `.txt` file, in a column named `text`.
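To get one row per file instead of one row per line (the default), one option is the `wholetext` flag of `DataFrameReader.text`. It reads each file as a single record in a column called `value`, and renaming that column gives the requested `text` column. Below is a minimal PySpark sketch. It assumes an existing `SparkSession` is passed in. The helper name `read_whole_text_files` and the example path are hypothetical.

```python
def read_whole_text_files(spark, path):
    """Return a DataFrame with one row per file under `path`, in a column named `text`.

    `spark` is assumed to be an active pyspark.sql.SparkSession; `path` may be a
    directory or a glob such as "/data/docs/*.txt" (hypothetical location).
    """
    # wholetext=True reads each file as one row instead of splitting it on newlines.
    # spark.read.text names its output column "value", so rename it to "text".
    return spark.read.text(path, wholetext=True).withColumnRenamed("value", "text")
```

Usage might look like `df = read_whole_text_files(spark, "/data/docs/*.txt")`. If you also need the originating file name, `pyspark.sql.functions.input_file_name()` can be added as an extra column. At the RDD level, `spark.sparkContext.wholeTextFiles(path)` returns `(path, content)` pairs.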

The Snowflake Connector for Spark ("Spark connector") now uses the …

A separate question is how to read a text file into a DataFrame with Spark in Scala. I am reading a text file and storing it in an `RDD[Array[String]]`.
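The PySpark counterpart of an `RDD[Array[String]]` is an RDD of Python lists, one list of fields per line. The sketch below assumes a delimited text file at a hypothetical path and a comma delimiter. The helper names are illustrative.

```python
def split_line(line, delimiter=","):
    """Split one line into a list of fields (plain split, no CSV quote handling)."""
    return line.split(delimiter)


def read_as_field_lists(spark, path, delimiter=","):
    """PySpark analogue of Scala's RDD[Array[String]]: one list of fields per input line.

    `spark` is assumed to be an active SparkSession and `path` a hypothetical text file.
    """
    return spark.sparkContext.textFile(path).map(lambda line: split_line(line, delimiter))


def field_lists_to_dataframe(spark, rdd, column_names):
    """Convert the RDD of field lists into a DataFrame.

    Every line is expected to have exactly len(column_names) fields.
    """
    return spark.createDataFrame(rdd, schema=column_names)
```

In Scala the same idea is `sc.textFile(path).map(_.split(","))`. Plain `split` does not handle quoted fields, so for real CSV data `spark.read.csv` is usually the safer choice.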

I know how to read from a Snowflake table with the Spark connector, like below, where `sfparams` is a dict with the connection options:

`df = spark.read.format("snowflake").options(**sfparams)`

You might need to add `SNOWFLAKE_SOURCE_NAME = "net.snowflake.spark.snowflake"` before you create your DataFrame, and then pass it to `spark.read.format(...)`.
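The snippet above stops after `.options(**sfparams)`. A complete read also needs a table (or query) and a call to `load()`. This is a sketch that assumes the standard Spark connector options `sfURL`, `sfUser`, `sfPassword`, `sfDatabase`, `sfSchema`, and `sfWarehouse`. The helper names and all connection values are hypothetical placeholders.

```python
# Source name registered by the Snowflake Connector for Spark. On Databricks,
# where the connector is preinstalled, the short name "snowflake" is typically used.
SNOWFLAKE_SOURCE_NAME = "net.snowflake.spark.snowflake"


def make_sf_params(url, user, password, database, schema, warehouse):
    """Build the connection-options dict that is passed as **sfparams."""
    return {
        "sfURL": url,  # e.g. "<account_identifier>.snowflakecomputing.com"
        "sfUser": user,
        "sfPassword": password,
        "sfDatabase": database,
        "sfSchema": schema,
        "sfWarehouse": warehouse,
    }


def read_snowflake_table(spark, sfparams, table):
    """Load a whole Snowflake table into a Spark DataFrame."""
    return (
        spark.read.format(SNOWFLAKE_SOURCE_NAME)
        .options(**sfparams)
        .option("dbtable", table)
        .load()
    )


def read_snowflake_query(spark, sfparams, query):
    """Load the result of a SELECT statement instead of a whole table."""
    return (
        spark.read.format(SNOWFLAKE_SOURCE_NAME)
        .options(**sfparams)
        .option("query", query)
        .load()
    )
```

The `dbtable` and `query` options are alternatives. Use one or the other for a given read.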

I've tried the following but I got a DataFrame where the text is separated by …

Let's explore how to connect to Snowflake using PySpark, and read and write data in various ways. Before we dive in, make sure you have the following installed: …

The Databricks Runtime 4.2 native Snowflake connector allows your Databricks account to read data from and write data to Snowflake without importing any libraries. The following example applies to Databricks Runtime 11.3 LTS and above:

`val snowflake_table = spark.read.format("snowflake").option("host", "hostname")…`

Version 2.6 of the Snowflake Connector for Spark turbocharges reads with Apache Arrow. Using a library for any of three languages, you …

Here is a simple snippet that might help:

`dept_df = spark.read.format('jdbc').option('url', 'jdbc:oracle:thin:scott/scott@//localhost:1522/oracle').option('dbtable', …`
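The Oracle `dept_df` snippet is cut off after `.option('dbtable', …`. A completed read might look like the sketch below. It assumes the Oracle JDBC driver jar is on the Spark classpath and uses the driver class `oracle.jdbc.OracleDriver`. The table name in the usage note (`DEPT`, from the classic `scott` demo schema) is a hypothetical stand-in for the missing value.

```python
def read_oracle_table(spark, url, table, user=None, password=None):
    """Read one Oracle table over JDBC into a DataFrame.

    Assumes the Oracle JDBC driver is on the Spark classpath. Credentials may be
    embedded in the URL (as in scott/scott@...) or passed separately.
    """
    reader = (
        spark.read.format("jdbc")
        .option("url", url)
        .option("dbtable", table)
        .option("driver", "oracle.jdbc.OracleDriver")
    )
    if user is not None:
        # Only set explicit credentials when they are not part of the URL.
        reader = reader.option("user", user).option("password", password)
    return reader.load()
```

For example: `dept_df = read_oracle_table(spark, "jdbc:oracle:thin:scott/scott@//localhost:1522/oracle", "DEPT")`.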

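The Databricks Runtime 11.3 LTS and above read, whose Scala form begins `spark.read.format("snowflake").option("host", "hostname")`, can be sketched in full in PySpark. The option names below (`host`, `port`, `user`, `password`, `sfWarehouse`, `database`, `schema`, `dbtable`) follow the Databricks form as I understand it. The defaults of port `443` and schema `public` are assumptions. Check both against the Databricks documentation for your runtime. The helper name is hypothetical.

```python
def read_snowflake_databricks(spark, host, user, password, warehouse, database, table,
                              schema="public", port="443"):
    """Sketch of a Databricks Runtime 11.3 LTS+ style Snowflake read (assumed option names)."""
    return (
        spark.read.format("snowflake")
        .option("host", host)
        .option("port", port)  # assumed optional, defaulting to 443
        .option("user", user)
        .option("password", password)
        .option("sfWarehouse", warehouse)
        .option("database", database)
        .option("schema", schema)  # assumed optional, defaulting to "public"
        .option("dbtable", table)
        .load()
    )
```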

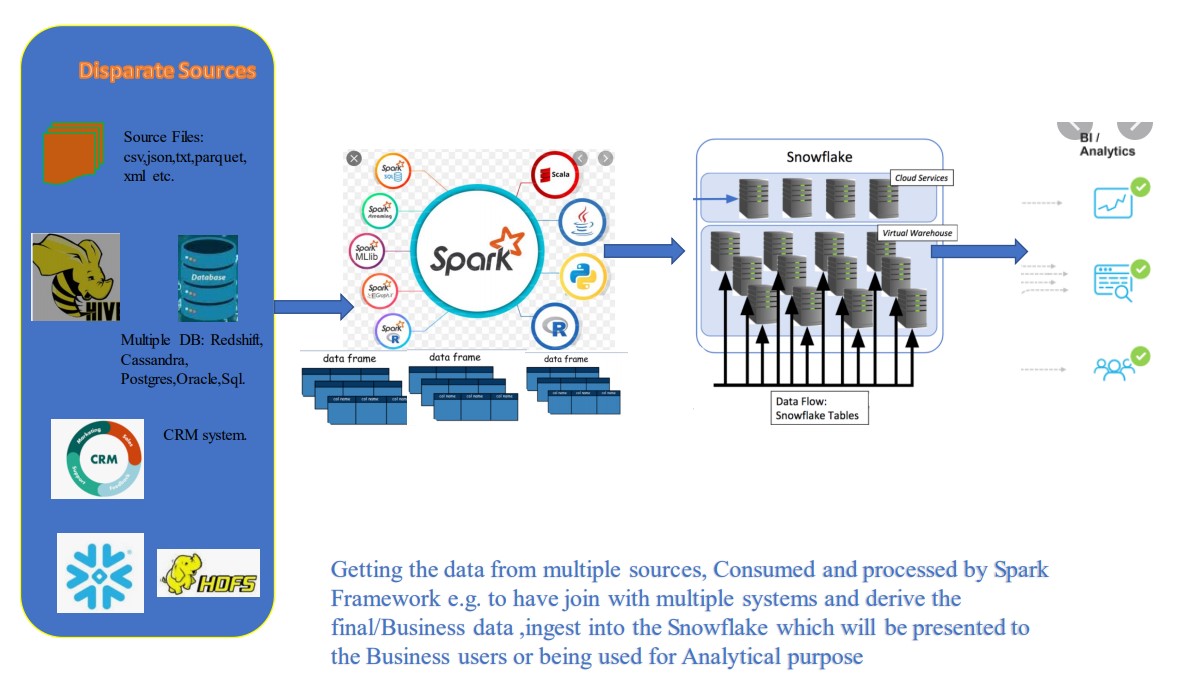

The Snowflake Connector for Spark ("Spark connector") brings Snowflake into the Apache Spark ecosystem, enabling Spark to read data from, and write data to, Snowflake.
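The write direction uses the same connector through `DataFrameWriter`. Below is a minimal sketch. It assumes a connection-options dict like the `sfparams` used for reads. The helper name and target table are hypothetical.

```python
SNOWFLAKE_SOURCE_NAME = "net.snowflake.spark.snowflake"


def write_snowflake_table(df, sfparams, table, mode="append"):
    """Write a Spark DataFrame to a Snowflake table through the Spark connector.

    `mode` is a standard Spark save mode: "append", "overwrite", "ignore", or
    "error"/"errorifexists".
    """
    (
        df.write.format(SNOWFLAKE_SOURCE_NAME)
        .options(**sfparams)
        .option("dbtable", table)
        .mode(mode)
        .save()
    )
```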

From Spark's perspective, Snowflake looks similar to other Spark data sources (PostgreSQL, HDFS, S3, etc.).

`spark.read` returns a `DataFrameReader`, which is used to read data from various sources. The Snowpark library provides an intuitive API for querying and processing data at scale in Snowflake. To capture the output of a `SHOW` command, we use the `Utils.runQuery` method to run the command and capture its results in a transient table, and then use `spark.read.format` to read that table into a DataFrame.
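Every source goes through the same `DataFrameReader` chain (`format`, then `option`, then `load`), so a single helper can cover files, JDBC databases, and Snowflake alike. This is a minimal sketch. The helper name `load_source` and the example paths and URLs in the comments are hypothetical.

```python
def load_source(spark, fmt, options=None, path=None):
    """Read any Spark data source through the generic format/option/load chain."""
    reader = spark.read.format(fmt)
    for key, value in (options or {}).items():
        reader = reader.option(key, value)
    # File-based sources take a path; JDBC and Snowflake name their table in the options.
    return reader.load(path) if path is not None else reader.load()


# Hypothetical usage:
# csv_df = load_source(spark, "csv", {"header": "true", "sep": ","}, "/data/in.csv")
# pg_df = load_source(spark, "jdbc", {"url": "jdbc:postgresql://localhost:5432/db",
#                                     "dbtable": "public.users"})
```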


Spark provides several read options that help you read files.

Step 1: The first thing you need to do is decide which version of the Snowflake Spark connector (SSC) you would like to use, and then find the Scala and Spark versions that are compatible with it.
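In practice, step 1 comes down to picking a Maven coordinate. The sketch below assumes the connector's artifact naming scheme is `net.snowflake:spark-snowflake_<Scala version>:<connector version>-spark_<Spark version>`. The helper is hypothetical, and any version numbers you plug in must be checked against the connector's release notes for combinations that actually exist.

```python
def spark_snowflake_coordinate(scala_version, connector_version, spark_version):
    """Build a Maven coordinate for the Snowflake Connector for Spark.

    Assumes the naming scheme
    net.snowflake:spark-snowflake_<scala>:<connector>-spark_<spark>.
    """
    scala_binary = ".".join(scala_version.split(".")[:2])  # "2.12.15" -> "2.12"
    spark_minor = ".".join(spark_version.split(".")[:2])   # "3.4.1" -> "3.4"
    return f"net.snowflake:spark-snowflake_{scala_binary}:{connector_version}-spark_{spark_minor}"
```

The resulting string could be passed as `SparkSession.builder.config("spark.jars.packages", coordinate)`. The Snowflake JDBC driver (`net.snowflake:snowflake-jdbc`) must also be available.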

