Web parameters path str, list or rdd. Web pyspark json data source provides multiple options to read files in different options, use multiline option to read json files scattered across multiple. Web this conversion can be done using sparksession.read.json () on either a dataset [string] , or a json file. Action n threshold0 => action n action x. Lets say the folder has 5 json files but we need to read only 2.

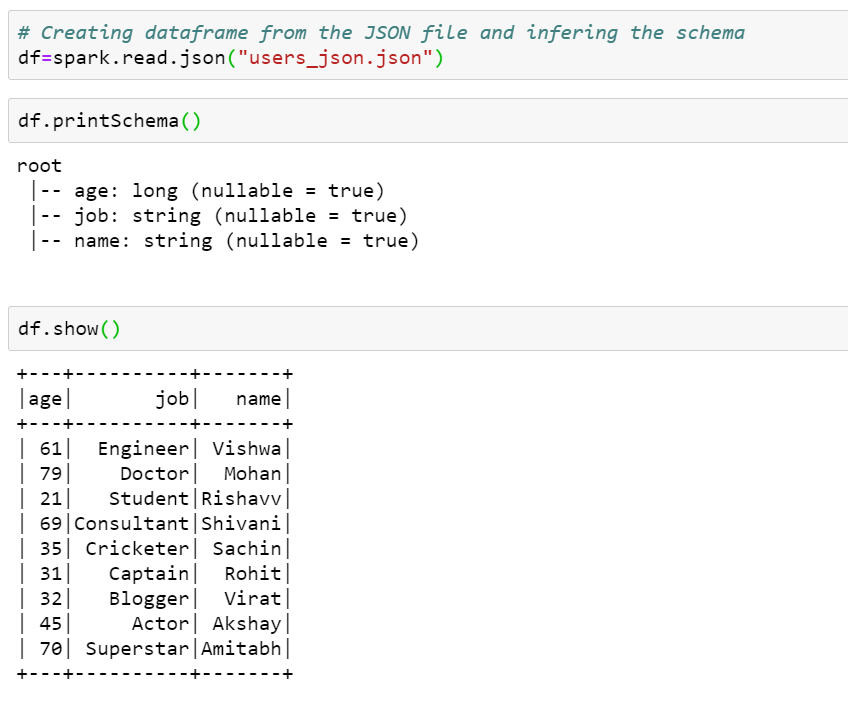

Web to read a csv file you must first create a dataframereader and set a number of options. Read and parse a json from a text file. This conversion can be done using sparksession.read.json on a json file. Web parameters path str, list or rdd. String represents path to the json dataset, or a list of.

This conversion can be done using sparksession.read.json on a json file. Web spark sql provides spark.read ().csv (file_name) to read a file or directory of files in csv format into spark dataframe, and dataframe.write ().csv (path) to write to a csv file. You can read data from hdfs ( hdfs:// ), s3 ( s3a:// ), as well as the local file system ( file:// ). Read and parse a json from a text file. Web # input source folder having multiple json files will result in multiple rows data_sdf = spark.read.option ('multiline', 'true').

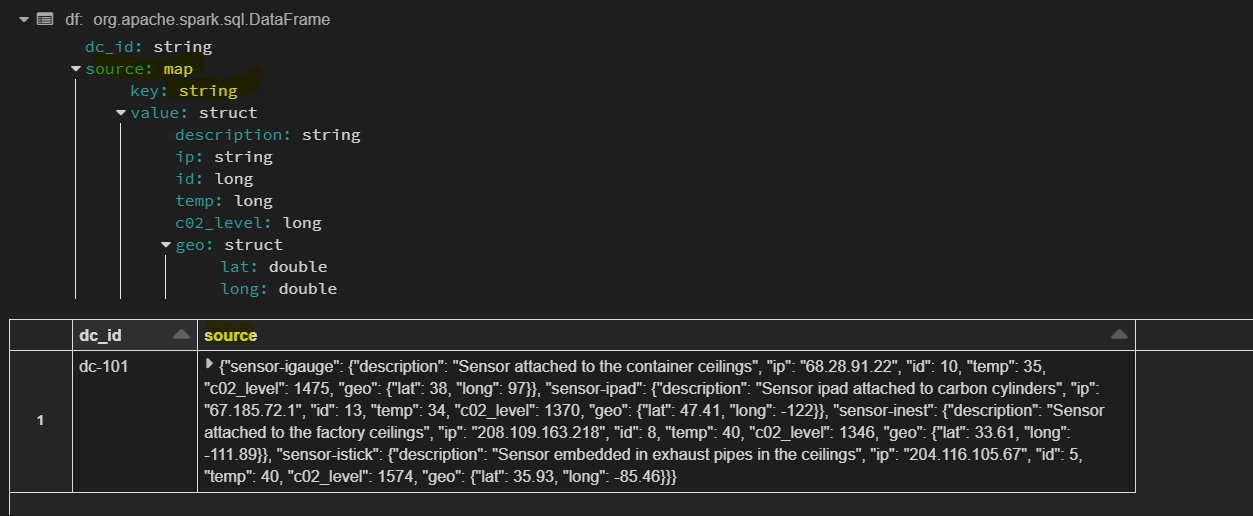

Web if the schema parameter is not specified, this function goes through the input once to determine the input schema. String represents path to the json dataset, or a list of paths, or rdd of strings storing json objects. Web spark sql provides spark.read ().csv (file_name) to read a file or directory of files in csv format into spark dataframe, and dataframe.write ().csv (path) to write to a csv file. Web to read specific json files inside the folder we need to pass the full path of the files comma separated. Code snippets & tips sendsubscribe search. Web # input source folder having multiple json files will result in multiple rows data_sdf = spark.read.option ('multiline', 'true'). In this section, we will see parsing a json string from a text file and convert it to spark dataframe columns using. Note that the file that is offered as a json file is not a typical json file. You can read data from hdfs ( hdfs:// ), s3 ( s3a:// ), as well as the local file system ( file:// ). Web parameters path str, list or rdd. Web pyspark json data source provides multiple options to read files in different options, use multiline option to read json files scattered across multiple. By default, spark considers every record in a json file as a fully. Read and parse a json from a text file. Web spark sql can automatically infer the schema of a json dataset and load it as a dataframe. Web we can have more than 2 threshold and for every threshold it can have 1 or more action.

Web We Can Have More Than 2 Threshold And For Every Threshold It Can Have 1 Or More Action.

If you are reading from a secure s3 bucket be sure to set the following. String represents path to the json dataset, or a list of. String represents path to the json dataset, or a list of paths, or rdd of strings storing json objects. Web spark sql can automatically infer the schema of a json dataset and load it as a dataframe.

Web This Conversion Can Be Done Using Sparksession.read.json () On Either A Dataset [String] , Or A Json File.

Web # input source folder having multiple json files will result in multiple rows data_sdf = spark.read.option ('multiline', 'true'). Note that the file that is offered as a json file is not a typical json file. Read and parse a json from a text file. Code snippets & tips sendsubscribe search.

By Default, Spark Considers Every Record In A Json File As A Fully.

Web to read a csv file you must first create a dataframereader and set a number of options. Lets say the folder has 5 json files but we need to read only 2. This conversion can be done using sparksession.read.json on a json file. Web if the schema parameter is not specified, this function goes through the input once to determine the input schema.

Web Parameters Path Str, List Or Rdd.

Web spark sql provides spark.read ().csv (file_name) to read a file or directory of files in csv format into spark dataframe, and dataframe.write ().csv (path) to write to a csv file. The docs on that method say the options are. Web pyspark json data source provides multiple options to read files in different options, use multiline option to read json files scattered across multiple. You can read data from hdfs ( hdfs:// ), s3 ( s3a:// ), as well as the local file system ( file:// ).